Entropy for beginners

In thermodynamics textbooks, the state function ‘entropy’ can be introduced from first principles, which makes the study of thermodynamics much more accessible.

CONSIDERATIONS

Textbooks on thermodynamics typically begin with a discussion of energy and a statement of the First Law of Thermodynamics, followed by a discussion of the Second Law and the concept of entropy. The results of both laws are then applied to understand what happens when an ideal gas is compressed or expanded, what drives ideal gases to mix with one another, how chemical equilibrium is reached, how water condenses and crystallises, how trees transport water up to the highest leaf and how their roots damage underground piping and roads, how engines, refrigerators and heat pumps work, and so on.

In the present discussion we take a closer look at the definition of entropy and at the Second Law of Thermodynamics.

Entropy is one of several functions of state that characterise the equilibrium of a physical system. Historically, its introduction has been confusing. It started with the statement that for any physical system there exists a function S, called ‘entropy’, which increases after a small (reversible) supply of heat dQ at temperature T according to:

$$ dS = \frac{dQ}{T} \qquad (1) $$

Stated this way, without any reference to basic principles of physics, the definition is weak and open to interpretation, and one is led to look for its roots elsewhere. Moreover, the definition is critically limited in several ways. It cannot, for instance, adequately explain the entropy of mixing, which points to a fundamental defect. These and other limitations are sufficient reason to abandon the classical approach.

An alternative approach, Boltzmann’s statistical approach, placed on a firm footing by wave mechanics, does connect with first principles and has none of the limitations of the classical definition.

Today, in scientific and technical communities, thermodynamics is applied mainly on the basis of the latter approach. Not so in education. Teachers persist in starting with the classical approach (also called the ‘calorimetric’ or ‘phenomenological’ approach) and only afterwards introduce the statistical method (see for instance Wikipedia: Entropy). In our opinion this confuses students and does not contribute to clarity of scientific thought.

In many textbooks a straightforward introduction to statistical principles is burdened with mathematical baggage, which makes the approach rather difficult; in our opinion, however, entropy can be explained more accessibly. As the molecular character of matter is a common topic in the elementary secondary-school physics curriculum, and some wave mechanics is usually included as well, entropy can and should be introduced with the statistical approach described below.

The following paragraphs provide a text that can serve as a model for adapting the chapter that introduces entropy, almost without mathematics. We also show that this definition agrees with the historical one, and how a link to the subsequent chapters of thermodynamics can be made.

This introduction begins with the discussion of ‘quantum states’.

THE EXISTENCE OF QUANTUM STATES

Functions such as temperature, pressure, volume, amount of matter and so on characterise the ‘state’ of a physical system. In all common experiments the values of these ‘functions of state’ remain constant in equilibrium, although on an atomic scale the situation changes perpetually. In a gas, for instance, every collision between atoms produces a new situation without any change in temperature, pressure or volume, giving rise to a long sequence of different ‘states’. In what follows we call these states, which describe the atomic motion, ‘microstates’, and reserve the term ‘macrostate’ for the state defined by its temperature, volume, pressure and so on.

In classical mechanics the number of microstates in a gas is not well defined. Consider an atom in a box, moving along the x-coordinate between x_0 and x_E with velocity v. This v can take an unlimited number of values, and at a given moment the atom’s position can likewise be anywhere between x_0 and x_E. Consequently, in classical mechanics the moving atom could be in an infinite number of possible ‘microstates’.

In the early twentieth century, however, with the rise of quantum physics, this view of mechanics turned out to be too simple. The conclusion was that the number of microstates in any physical system is well defined: it may be large, very large, but not infinite.

In this new theory, the discrete microstates are called ‘quantum states’.

Quantum mechanics

The word ‘quantum’ stems from a conclusion Max Planck drew about the nature of light. In the nineteenth century the wave theory of light was generally accepted, since it could account for ‘all’ experimental facts.

In 1900, however, while studying the radiation emitted by ‘black bodies’ and its frequency distribution, Planck was confronted with experimental facts that were incompatible with the wave theory. He was forced to conclude that the energy of black-body radiation is exchanged in discrete quantities, ‘wave parcels’ (‘quanta’). Subsequent work, notably by Einstein in 1905, showed that not only black-body radiation but all light and other electromagnetic radiation consists of such wave parcels, which behave both as particles and as waves.

This insight set off a chain of developments: between 1900 and 1930 an entirely new physics was developed.

The classical picture of atoms as miniature billiard balls proved untenable: in many respects atoms turned out to behave as wave parcels, analogous to light quanta.

In the old physics, based on classical (Newtonian) mechanics, any object can in principle be picked up to observe its size, weight and other properties. Its motion is a notion quite separate from these properties.

Waves, on the contrary, do not exist without their motion: in quantum mechanics the existence and the motion of a particle are connected aspects of the same thing.

Obviously, after centuries of successful application of classical mechanics, the conclusion that it is obsolete and must be replaced by a seemingly ‘absurd’ kind of mechanics met with doubts in the scientific community. Fortunately, the results of quantum mechanics and classical mechanics agree in many cases, especially for systems of ordinary size at normal temperatures. This agreement, which leaves the earlier results of classical mechanics fully intact, is called the ‘correspondence principle’.

Freely moving atoms

Where the results of classical and wave mechanics agree closely, one may freely apply either theory to the same object; where the two theories give different results, quantum mechanics is to be preferred.

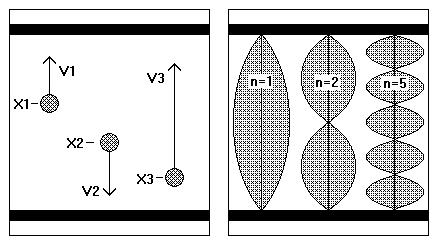

As an illustration, consider the simplest case: atoms moving in one dimension between two walls. Figure 1 shows that in this case the difference between the two points of view is very large.

From the point of view of classical mechanics, an atom moving back and forth between the two walls can be at any position x and have any velocity v, so the number of different ‘states’ is unlimited. In quantum mechanics, on the other hand, only standing waves are allowed, so the number of possible states is well defined; states in between are impossible.

Figure 1. Atoms moving freely in one dimension, as considered classically and wave-mechanically.

Both pictures represent a view of atoms moving in one dimension between two walls. In classical mechanics the atoms are represented by miniature billiard balls, whereas in wave mechanics the same atoms are represented by standing waves. In the second case the number of ‘states’ is well defined; in the first case it is not.

Consequently, for the study of microstates we have to resort to quantum mechanics. In the present case the standing waves are characterised by a quantum number n, which is a positive whole number. Each wave pattern has its own characteristic energy, proportional to n^2. (In classical mechanics the energy would be proportional to v^2.)
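As a rough numerical illustration of this paragraph, the sketch below evaluates the standard particle-in-a-box energies E_n = n^2 h^2/(8 m L^2) for an argon atom. The box width of 1 cm and the temperature of 300 K are assumptions chosen for illustration, and the helper `box_energy` is hypothetical. Besides the n^2 scaling, the last line shows how small the level spacing is compared with kT, which is one way to see why classical and quantum results agree for ordinary gases.

```python
# Particle-in-a-box ("standing wave") energies: E_n = n^2 h^2 / (8 m L^2).
# Assumed example: one argon atom in a 1 cm wide box, compared with kT at 300 K.

H = 6.62607015e-34        # Planck constant, J s
K_B = 1.380649e-23        # Boltzmann constant, J/K
AMU = 1.66053906660e-27   # atomic mass unit, kg


def box_energy(n: int, mass: float, width: float) -> float:
    """Energy in joule of standing-wave pattern n (hypothetical helper)."""
    if n < 1:
        raise ValueError("the quantum number n must be a positive whole number")
    return n**2 * H**2 / (8 * mass * width**2)


m_ar = 39.948 * AMU       # mass of one argon atom, kg
width = 0.01              # box width, m (assumption)
T = 300.0                 # temperature, K (assumption)

e1 = box_energy(1, m_ar, width)
for n in (1, 2, 3, 10):
    # The ratio E_n / E_1 equals n^2 up to rounding: about 1, 4, 9, 100.
    print(n, box_energy(n, m_ar, width) / e1)

# The lowest level is tiny compared with the thermal energy kT.
print("E_1 / kT =", e1 / (K_B * T))
```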

The pattern of standing waves of one particle in one dimension is very simple; a particle in three dimensions already has a more complicated wave pattern, characterised by three quantum numbers, for instance n_x, n_y and n_z in x-y-z space. When the particle moves in a cubic vessel, this three-dimensional wave pattern corresponds to an energy level proportional to:

$$ n_x^2 + n_y^2 + n_z^2 $$

A system with more than one particle moving independently in a three-dimensional volume exhibits still more complicated wave patterns, and when the atoms are not free but touch each other, as in liquids or crystals, an even more complicated pattern of concerted waves exists, though always a well-defined one.

THE NUMBER OF QUANTUM STATES AND ENTROPY

Mathematically the quantum number n has no upper limit, but since the energy of a real system is always limited, the quantum numbers are limited as well.

Suppose, for instance, that the upper limit of the quantum number is n = 10. The number of possible wave patterns for one-dimensional motion is then ten. For one particle moving in three dimensions the number of wave patterns becomes one thousand (10^3), and for two particles in the same volume one million (10^6). Along this line of reasoning, a common system of N particles in three dimensions has

$$ 10^{3N} $$

possible wave patterns, a number that is very large but always well defined.
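The counting argument can be checked by brute force for the small cases mentioned. This is a minimal sketch that, like the paragraph above, treats every particle as distinguishable and gives each coordinate a quantum number from 1 to 10; the function names are illustrative only.

```python
from itertools import product

N_MAX = 10  # upper limit of each quantum number, as assumed in the text


def count_patterns(n_coords: int, n_max: int = N_MAX) -> int:
    """Brute-force count of wave patterns: each of n_coords coordinates
    independently takes a quantum number 1..n_max (distinguishable particles)."""
    return sum(1 for _ in product(range(1, n_max + 1), repeat=n_coords))


def count_formula(n_particles: int, n_max: int = N_MAX) -> int:
    """Closed form for N particles in three dimensions: n_max ** (3N)."""
    return n_max ** (3 * n_particles)


print(count_patterns(1))   # one particle, one dimension      -> 10
print(count_patterns(3))   # one particle, three dimensions   -> 1000
print(count_patterns(6))   # two particles, three dimensions  -> 1000000
print(count_formula(2) == count_patterns(6))                  # -> True
```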

For real systems, as handled in the laboratory and in technical applications, this calculation is not simple: the upper limit of the quantum numbers is always much greater than 10, and moreover the number of particles (atoms) is generally huge; one mole contains about 6 × 10^23 atoms.

Nevertheless the calculation is possible. As early as 1912, Sackur and Tetrode independently developed a formula for the number of quantum states available in an ideal gas, assuming all atoms to move independently of each other in a well-defined volume (see Wikipedia: Sackur–Tetrode equation); it is an important early result of quantum theory. The formula contains relevant corrections, the most important being a strict distinction between identical and different atoms in the system: interchanging two different atoms produces a new quantum state, whereas interchanging two identical atoms does not.

(This strict distinction gives rise to the ‘entropy of mixing’ and eliminates a contradiction of classical physics known as the ‘Gibbs paradox’.)

The result of calculations with the Sackur–Tetrode formula is remarkable: for 1 mole of argon at a temperature of 300 K and a pressure of 1 bar (conditions under which argon closely approaches an ‘ideal gas’), the number of ‘available’ quantum states turns out to be approximately:

$$ g \approx 10^{4.9 \times 10^{24}} $$

Very large indeed, but not infinite.

Numbers of this size are beyond our comprehension and are difficult to handle in any calculation. For calculations it suffices to take the exponent, about 4.9 × 10^24, which is the ‘logarithm’ of g (in formula: log(g)), and to multiply it by a suitable small factor. This is common practice in thermodynamics, where for convenience the ‘natural logarithm’ ln(g) is used, which is about 2.3 times as large as log(g).

The number ‘tamed’ in this way is called ‘entropy’ and is denoted by the symbol S; as a formula:

$$ S = k \ln g \qquad (2) $$

The ‘suitable’ factor k is always the ‘Boltzmann constant’:

$$ k = 1.380649 \times 10^{-23} \ \text{e.u.} $$

(e.u. being ‘entropy units’).

In words: entropy is a measure of the number of quantum states. With formula (2), the entropy of the 1 mole of argon at 300 K and 1 bar mentioned above can now be calculated:

$$ S = k \ln g \approx 155 \ \text{e.u. per mol} $$
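The numbers quoted for argon can be reproduced with the Sackur–Tetrode equation for a monatomic ideal gas, S/(Nk) = ln[(kT/p)(2πmkT/h^2)^{3/2}] + 5/2. The sketch below is a hedged illustration, not the author’s own calculation; it assumes CODATA values of the constants and an argon atomic mass of 39.948 u, and it works with ln g throughout, because g itself is far too large to store as a floating-point number.

```python
import math

K_B = 1.380649e-23        # Boltzmann constant, J/K
H = 6.62607015e-34        # Planck constant, J s
N_A = 6.02214076e23       # Avogadro constant, 1/mol
AMU = 1.66053906660e-27   # atomic mass unit, kg


def ln_g_per_atom(mass: float, T: float, p: float) -> float:
    """Sackur-Tetrode: S/(N k) = ln[(kT/p) (2 pi m k T / h^2)^(3/2)] + 5/2."""
    volume_per_atom = K_B * T / p                                    # m^3
    quantum_density = (2 * math.pi * mass * K_B * T / H**2) ** 1.5   # 1/m^3
    return math.log(volume_per_atom * quantum_density) + 2.5


m_ar = 39.948 * AMU                                 # argon atom, kg
ln_g = N_A * ln_g_per_atom(m_ar, 300.0, 1.0e5)      # 1 mol at 300 K and 1 bar
log10_g = ln_g / math.log(10)                       # g = 10 ** log10_g
S = K_B * ln_g                                      # formula (2), J/K per mol

print(f"ln g    = {ln_g:.3e}")
print(f"log10 g = {log10_g:.3e}")
print(f"S       = {S:.1f} J/K per mol")
```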

THE SECOND LAW

The number of quantum states plays an important role in the establishment of physical equilibrium, for instance when a cup of tea cools down or when the rotation of a flywheel dies out.

Because these numbers of quantum states are so large in physical systems, any difference between two macrostates of the same system generally corresponds to a huge disparity between their numbers of quantum states.

An example is a system of two parts at different temperatures that moves spontaneously towards equal temperatures: think of an isolated system of two copper blocks, initially at 299 K and 301 K respectively, which moves to the state with both blocks at 300 K.

A relatively simple calculation shows that in this process the entropy increases; in the present case by 0.000004 e.u. This seems a small increase, but it is important, since it corresponds to a huge disparity in the number of quantum states: according to formula (2), the number of quantum states increases by the factor

$$ \frac{g_\text{final}}{g_\text{initial}} = e^{\Delta S / k} = e^{4 \times 10^{-6} / 1.38 \times 10^{-23}} \approx e^{2.9 \times 10^{17}} \approx 10^{1.3 \times 10^{17}} $$
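The figure of 0.000004 e.u. can be reproduced for two identical blocks, each with a constant heat capacity C, for which ΔS = C[ln(T_f/T_1) + ln(T_f/T_2)]. The size of the blocks is not stated, so the heat capacity of 0.36 J/K per block (roughly 1 g of copper) used below is an assumption, chosen so that the result matches the quoted increase.

```python
import math

K_B = 1.380649e-23   # Boltzmann constant, J/K


def entropy_change_two_blocks(c_block: float, t1: float, t2: float) -> float:
    """Entropy change (J/K) when two isolated blocks with equal, constant heat
    capacity c_block (J/K) equalise from temperatures t1 and t2."""
    t_final = (t1 + t2) / 2   # equal heat capacities and energy conservation
    return c_block * (math.log(t_final / t1) + math.log(t_final / t2))


C_BLOCK = 0.36   # J/K per block: roughly 1 g of copper (assumption, not in the text)
dS = entropy_change_two_blocks(C_BLOCK, 299.0, 301.0)
log10_factor = dS / (K_B * math.log(10))   # g_final / g_initial = 10 ** log10_factor

print(f"entropy increase: {dS:.1e} J/K")
print(f"number of quantum states grows by a factor 10**({log10_factor:.2e})")
```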

Since the energy is constant, all these quantum states have the same probability. The system wanders blindly over the enormous number of quantum states of both macrostates and ends up (almost) certainly in one of the quantum states of the macrostate with equal temperatures, driven merely by the huge disparity of numbers. (Compare the ‘pixel hopper’ in figure 2.)

Figure 2. The colour-blind pixel-hopper.

This picture has been built up as a coloured area with 100 000 blue pixels and 100 red pixels. Looking closely, the reader may find one black pixel in the red area. Suppose this is a ‘pixel hopper’, sitting on a red pixel, which wanders about by hopping from one (coloured) pixel to another. Since all pixels are equally probable, after some time it is 1000 times more probable to find the hopper on a blue pixel than on a red one. This move from red to blue cannot be ascribed to a mysterious preference driving the colour-blind hopper to blue; it is caused merely by the disparity of numbers.
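The pixel hopper can be simulated in a few lines. This sketch simplifies the hopping rule: each hop is assumed to land on any pixel with equal probability, which takes the caption’s ‘all pixels having equal probability’ literally and makes the starting pixel irrelevant after the first hop. The function name and random seed are illustrative choices.

```python
import random

N_BLUE = 100_000
N_RED = 100


def fraction_on_blue(n_hops: int, seed: int = 1) -> float:
    """Fraction of hops that land on a blue pixel when every hop lands on any
    of the N_BLUE + N_RED pixels with equal probability (simplified rule)."""
    rng = random.Random(seed)
    n_pixels = N_BLUE + N_RED
    on_blue = 0
    for _ in range(n_hops):
        pixel = rng.randrange(n_pixels)   # pixels 0 .. N_BLUE-1 are blue
        if pixel < N_BLUE:
            on_blue += 1
    return on_blue / n_hops


frac = fraction_on_blue(1_000_000)
print(f"fraction of hops on blue: {frac:.4f}")
print(f"blue:red odds about {frac / (1 - frac):.0f} (pixel ratio {N_BLUE // N_RED})")
```

The colour-blind rule never looks at colour; the roughly 1000 : 1 odds come entirely from the pixel counts.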

For all practical purposes, any change to a macrostate with higher entropy can take place spontaneously, and its reverse is so improbable that we cannot expect to observe it, not even in a billion years.

In thermodynamics we simplify the latter statement to ‘it is impossible’, and state:

In an isolated system a change with increasing entropy can take place spontaneously and irreversibly.

This statement is the ‘second law of thermodynamics’.

One consequence of this law is that a perpetual motion machine of the second kind, which in an isolated system converts some heat into useful work, is impossible: withdrawing this heat would decrease the system’s entropy, while the useful energy produced carries no entropy. Another consequence is that a reversible change in an isolated system cannot change its entropy: a decrease is impossible, and an increase would turn into a decrease upon reversal.

ENTROPY AND ENERGY BALANCING

Finally, it seems rather bold to borrow the word ‘entropy’ from well-established classical thermodynamics and use it for a quite different notion. This cannot be done without justification.

To justify it, we must compare the isolated systems considered up to now with the non-isolated systems considered in classical thermodynamics.

In an isolated system the energy lies between narrow limits, from E up to E + dE. In thermodynamics dE does not approach zero, as it would in mathematics, since the number of quantum states in the interval would then approach zero as well. In thermodynamics it is common practice to speak of an ‘isolated system’ as soon as dE is small enough for all quantum states within this partition to have (nearly) the same probability. Although non-zero, such a dE is small, too small to be detected calorimetrically.

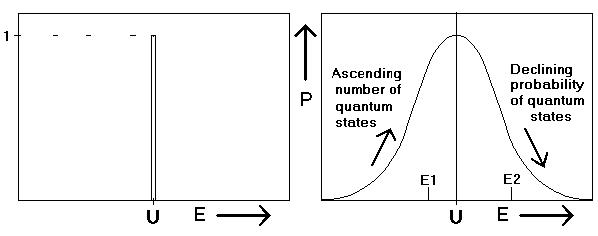

A non-isolated system in contact with a heat bath is in a quite different situation: its energy fluctuates permanently. The bell-shaped curve of the probability P (see figure 3) can be thought of as consisting of many equivalent partitions, each dE joule wide, whereas in the isolated state only one partition exists. Consequently the number of available quantum states increases considerably. For a macroscopic number of atoms this number increases by a very large factor, yet the resulting contribution to the entropy, k times the logarithm of that factor, is absolutely negligible.

It looks like a miracle: after multiplication by a million, a billion or even a trillion, the logarithm of such large numbers does not change for all practical purposes. This ‘miracle’ allows one to speak freely of ‘the’ entropy S, whether or not the system is isolated.
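Because g cannot be written down as an ordinary number, a sketch of this ‘miracle’ has to work with ln g directly. Using the approximate value ln g ≈ 1.12 × 10^25 for the mole of argon at 300 K and 1 bar (an input taken from the Sackur–Tetrode estimate, not a measured value), multiplying g by a trillion only adds ln(10^12) ≈ 27.6 to ln g:

```python
import math

K_B = 1.380649e-23   # Boltzmann constant, J/K
LN_G = 1.12e25       # approximate ln g for 1 mol argon at 300 K and 1 bar (input)

relative_change = {}
for factor in (1e6, 1e9, 1e12):
    extra = math.log(factor)            # ln(g * factor) - ln(g)
    relative_change[factor] = extra / LN_G
    print(f"g x {factor:.0e}: ln g grows by {extra:.1f}, "
          f"relative change {extra / LN_G:.1e}, "
          f"entropy change {K_B * extra:.1e} J/K")
```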

Figure 3 shows that the system’s probability P follows a bell-shaped curve, the result of two opposing effects: the increase in the number of quantum states and the decrease in their individual probability with increasing energy.

It can easily be shown (see VERIFICATION below) that the level E = U at which the numbers balance the probabilities is exactly the energy level at which the statistical approach and the classical approach agree; consequently, in equilibrium both approaches lead to the same result.

It is therefore correct to adopt the classical name ‘entropy’ for the statistical notion, as we did above.

Figure 3. Isolated system and a system in contact with a heat bath compared.

In both figures the vertical coordinate is the probability that the system is at energy level E. The left-hand picture shows the situation in an ‘isolated’ system, with P equal to unity: the system is always at energy level U. The right-hand picture shows the same system in equilibrium with a heat bath. Owing to thermal motion its energy fluctuates about the mean value U. The fluctuations are so small that they cannot be detected calorimetrically, but on an atomic scale they are important.

Two opposing trends, namely the increase in the number of quantum states and the decrease in their probability with energy, make the curve rise at first and decline at energies above U.

An interesting aspect of the fluctuating energy is the reversibility of changes within the bell-shaped probability curve. If, for example, the system has moved spontaneously from level E_1 to level E_2 in figure 3, one can be sure that after some time it will have returned spontaneously to E_1. Reversible processes, which play an important role in thermodynamics, are chains of such reversible changes.

VERIFICATION

In this section we justify an important claim made above, namely that the statistical and the classical approach lead to the same entropy.

As mentioned above, one difference between classical and statistical entropy is that the latter has been defined for isolated systems, whereas the classical definition is based on the change in entropy after heat is supplied to a non-isolated system in contact with a heat bath. We must therefore compare the non-isolated system with the isolated one to check whether the two definitions agree.

In contact with a heat bath the system’s energy fluctuates permanently, owing to impulses to and from atoms of the heat bath (see figure 3 above). In the figure, the probability of finding the system at energy level E is called P; more precisely, P·dE is the probability of finding the system at an energy level between E and E + dE. This interval dE can be chosen equal in width to what we called a ‘partition’ above, so that the whole function is divided up into ‘partitions’.

As mentioned above, two opposing effects determine the probability P and make it rise and fall. On the one hand, the number of quantum states increases with the energy level; on the other hand, the probability of each of these quantum states decreases with its energy, according to the so-called Boltzmann factor:

$$ B = e^{-E/kT} \qquad (3) $$

The two effects balance each other exactly at the top, where the P–E curve is horizontal:

$$ \frac{dP}{dE} = 0 \qquad (4) $$

This formula is the key to comparing the statistical with the classical definition of entropy: starting from the formula for the function P, the derivative in (4) can be evaluated, leading to the classical formula (1):

$$ dS = \frac{dQ}{T} $$

This evaluation proceeds as follows.

As a starting point we take the probability of the partition between E and E + dE:

$$ P \, dE = \frac{g \, B}{Z} \, dE = \frac{g(E) \, e^{-E/kT}}{Z} \, dE \qquad (5) $$

Here the function g is defined such that g·dE closely approximates the number of quantum states between E and E + dE. B is the Boltzmann factor, and Z is the ‘partition function’: the normalisation factor needed to make the sum of all probabilities equal to unity:

$$ Z = \int_0^\infty g(E) \, e^{-E/kT} \, dE $$

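Since dB/dE = −B/(kT), differentiating (5) with respect to E gives:

$$ \frac{dP}{dE} = \frac{1}{Z}\left( B \frac{dg}{dE} + g \frac{dB}{dE} \right) = \frac{g B}{Z} \left( \frac{1}{g} \frac{dg}{dE} - \frac{1}{kT} \right) \qquad (6) $$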

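This expression becomes zero when the factor

$$ \frac{1}{g} \frac{dg}{dE} - \frac{1}{kT} $$

is zero, or, since with S = k ln(g·dE) and a fixed partition width dE

$$ \frac{1}{g} \frac{dg}{dE} = \frac{d \ln g}{dE} = \frac{1}{k} \frac{dS}{dE}, $$

when:

$$ \frac{dS}{dE} = \frac{1}{T} \qquad (7) $$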
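Relation (7) can be checked numerically for a model in which g(E) is known. The sketch below assumes an ideal monatomic gas of N atoms, whose density of states grows as E^{3N/2 − 1}, and deliberately takes N = 100 so that the curve has a visible width; it locates the top of ln P(E) on a grid and compares the slope of k ln g there with 1/T. Additive constants in ln g (including ln dE and ln Z) are dropped, since they affect neither the position of the top nor the slope.

```python
import math

K_B = 1.380649e-23   # Boltzmann constant, J/K
T = 300.0            # heat-bath temperature, K (assumed)
N_ATOMS = 100        # deliberately small model system, for illustration only
F = 1.5 * N_ATOMS    # ideal monatomic gas: g(E) grows as E ** (F - 1)
kT = K_B * T


def ln_g(E: float) -> float:
    """ln of the density of quantum states, up to an additive constant."""
    return (F - 1) * math.log(E)


def ln_P(E: float) -> float:
    """ln P(E) from formula (5), dropping the constant ln Z."""
    return ln_g(E) - E / kT


# Scan E in steps of 0.001 kT and locate the top of the P-E curve, formula (4).
step = 0.001 * kT
i_top = max(range(1, 400_000), key=lambda i: ln_P(i * step))
E_top = i_top * step

# Slope of S = k ln g at the top, by a central difference.
h = 1e-6 * E_top
dS_dE = K_B * (ln_g(E_top + h) - ln_g(E_top - h)) / (2 * h)

print(f"top of the curve at E = {E_top / kT:.3f} kT")
print(f"dS/dE at the top = {dS_dE:.6e} 1/K;  1/T = {1 / T:.6e} 1/K")
```

For this model the top lies at E = (3N/2 − 1)kT, and the two printed slopes agree, as (7) requires.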

One consequence of relation (7) is that the entropy unit must have the dimension joule per kelvin, J·K⁻¹. Its implication when heat is supplied reversibly to the system also deserves attention.

Heat supply

When dQ joules of heat are supplied reversibly to the system, dQ being more than one partition wide, and no work is done, the mean energy U increases by dU = dQ.

Assuming dS/dE to be constant during the heat supply, we obtain from (7):

$$ dS = \frac{dU}{T} \qquad (8) $$

and subsequently, since dU = dQ:

$$ dS = \frac{dQ}{T} \qquad (9) $$

which is identical to the classical formula (1), with the consequence:

Statistical entropy is identical to classical entropy.
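As a consistency check of (9), one can compare two routes for one mole of argon heated reversibly at constant volume from 300 K to 310 K: the difference of the statistical entropies given by the Sackur–Tetrode formula, and the classical sum of dQ/T over many small heat supplies with dQ = dU = (3/2)R dT. The molar heat capacity (3/2)R of a monatomic ideal gas, the temperature interval and the function name are assumptions of this sketch.

```python
import math

K_B = 1.380649e-23        # Boltzmann constant, J/K
H = 6.62607015e-34        # Planck constant, J s
N_A = 6.02214076e23       # Avogadro constant, 1/mol
AMU = 1.66053906660e-27   # atomic mass unit, kg
R = N_A * K_B             # gas constant, J/(mol K)


def statistical_entropy(T: float, V: float, mass: float, n_atoms: float) -> float:
    """S = k ln g of a monatomic ideal gas (Sackur-Tetrode), volume V in m^3."""
    per_atom = math.log((V / n_atoms) * (2 * math.pi * mass * K_B * T / H**2) ** 1.5) + 2.5
    return n_atoms * K_B * per_atom


m_ar = 39.948 * AMU
V = R * 300.0 / 1.0e5     # 1 mol at 300 K and 1 bar; the volume is then kept fixed
T1, T2 = 300.0, 310.0

# Statistical route: difference of k ln g between the final and initial states.
dS_statistical = statistical_entropy(T2, V, m_ar, N_A) - statistical_entropy(T1, V, m_ar, N_A)

# Classical route: add up dQ/T for many small reversible heat supplies, where
# dQ = dU = (3/2) R dT at constant volume (no work done).
steps = 100_000
dT = (T2 - T1) / steps
dS_classical = sum(1.5 * R * dT / (T1 + (i + 0.5) * dT) for i in range(steps))

print(f"statistical: {dS_statistical:.6f} J/K   classical: {dS_classical:.6f} J/K")
```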

TOTAL DIFFERENTIAL

Once entropy has been defined, formal thermodynamics and its applications can be developed as usual, using the mathematical concept of the total differential of a function of more than one variable.

If, for instance, z = f(x,y) is a single-valued function of two independent variables x and y, the function can be plotted in a rectangular coordinate system, the result being a surface. The functions considered in thermodynamics are such that this surface is always continuous and usually smoothly curved. Following the motion of a point (x,y) on the surface, a small move can be a step dx at constant y, i.e. parallel to the XZ coordinate plane, which increases z by dz, equal to the step in the x-direction multiplied by the corresponding slope:

$$ dz = \left( \frac{\partial z}{\partial x} \right)_y dx \qquad (10) $$

This is called a ‘partial differential’ of the function z. When a step dy in the y-direction is subsequently made, the corresponding increase is added, giving:

$$ dz = \left( \frac{\partial z}{\partial x} \right)_y dx + \left( \frac{\partial z}{\partial y} \right)_x dy \qquad (11) $$

This combined formula is the ‘total differential’ of the function z.
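A small numerical example may make (11) concrete. The function z = x^2 y below is purely a hypothetical example; the total differential built from its partial derivatives agrees with the actual change in z up to terms of second order in dx and dy.

```python
def z(x: float, y: float) -> float:
    """Hypothetical example surface z = f(x, y) = x**2 * y."""
    return x**2 * y


def partials(x: float, y: float) -> tuple[float, float]:
    """Analytical partial derivatives: (dz/dx at constant y, dz/dy at constant x)."""
    return 2 * x * y, x**2


x, y = 2.0, 3.0
dx, dy = 1e-3, -2e-3

dzdx, dzdy = partials(x, y)
dz_total = dzdx * dx + dzdy * dy            # total differential, formula (11)
dz_exact = z(x + dx, y + dy) - z(x, y)      # actual change of z on the surface

print(f"total differential: {dz_total:.6f}, actual change: {dz_exact:.6f}")
```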

When dQ joules of heat and dW joules of work are supplied to a physical system, its energy increases by:

$$ dU = dQ + dW \qquad (12) $$

Since Q and W are not functions of state, (12) cannot be compared with the total differential (11). It is possible, however, to substitute both terms so as to obtain a relation with the functions of state S and V as independent variables, which resembles (11) more closely. For a system consisting of a gas with volume V and pressure P, the work is dW = −P dV, while formula (9) gives dQ = T dS. Substituting both in (12) yields:

$$ dU = T \, dS - P \, dV \qquad (13) $$

Relation (13) is a true total differential of the function U(S,V) provided two conditions are satisfied: first, the volume must be kept constant during the heat supply, and second, the entropy of the gas must remain constant during the supply of work. The first condition is easily satisfied. The second can be fulfilled as well, for instance when the work is delivered reversibly to or from a rotating flywheel: gas plus flywheel together can be considered an isolated system, so their combined entropy remains constant during this change, and since the flywheel carries no entropy, the entropy of the gas remains constant too. The conditions for a total differential are then satisfied, and (13) can also be written as:

$$ dU = \left( \frac{\partial U}{\partial S} \right)_V dS + \left( \frac{\partial U}{\partial V} \right)_S dV \qquad (14) $$

From this it can be concluded that:

$$ \left( \frac{\partial U}{\partial S} \right)_V = T \qquad (15) $$

and:

$$ \left( \frac{\partial U}{\partial V} \right)_S = -P \qquad (16) $$
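Relations (15) and (16) can be checked numerically for one mole of argon treated as an ideal monatomic gas. Solving the Sackur–Tetrode equation for the energy gives U(S,V) = (3Nh^2/(4πm))(N/V)^{2/3} exp(2S/(3Nk) − 5/3); the sketch below takes central finite differences of this function at 300 K and 1 bar and should recover T and P. The choice of state, the step sizes and the function name are assumptions of the sketch.

```python
import math

K_B = 1.380649e-23        # Boltzmann constant, J/K
H = 6.62607015e-34        # Planck constant, J s
N_A = 6.02214076e23       # Avogadro constant, 1/mol
AMU = 1.66053906660e-27   # atomic mass unit, kg

N = N_A                   # one mole of atoms
M = 39.948 * AMU          # mass of an argon atom, kg
NK = N * K_B              # N k (equal to R for one mole), J/K


def energy(S: float, V: float) -> float:
    """U(S, V) of a monatomic ideal gas: the Sackur-Tetrode equation solved for U."""
    prefactor = 3 * N * H**2 / (4 * math.pi * M)
    return prefactor * (N / V) ** (2 / 3) * math.exp(2 * S / (3 * NK) - 5 / 3)


# Reference state: 300 K and 1 bar (assumed), with S from the Sackur-Tetrode formula.
T0, P0 = 300.0, 1.0e5
V0 = NK * T0 / P0
S0 = NK * (math.log((K_B * T0 / P0) * (2 * math.pi * M * K_B * T0 / H**2) ** 1.5) + 2.5)

h_S, h_V = 1e-3, 1e-6     # finite-difference steps in J/K and m^3
T_num = (energy(S0 + h_S, V0) - energy(S0 - h_S, V0)) / (2 * h_S)    # (dU/dS)_V, (15)
P_num = -(energy(S0, V0 + h_V) - energy(S0, V0 - h_V)) / (2 * h_V)   # -(dU/dV)_S, (16)

print(f"(dU/dS)_V  = {T_num:.3f} K,  expected {T0} K")
print(f"-(dU/dV)_S = {P_num:.1f} Pa, expected {P0:.0f} Pa")
```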

It should be stated at this point that the claim above that ‘work involves no entropy’ is not absolute but a matter of magnitude. Quantum mechanics requires any rotating mass, whether a molecule or a wheel, to have its own quantum states, which contribute to the entropy. This contribution can be calculated, with the result that for rotating macroscopic objects it is absolutely negligible.

Starting from relations (14), (15) and (16), the properties of total differentials can be used to discuss the Carnot cycle, the heat pump, chemical equilibrium and all other relevant topics of thermodynamics, exactly as is done in standard textbooks. This accomplishes the aim of this text.